Gathering Requirements and Designing a Data Warehouse

How do you gather requirements for a Data Warehouse/Business Intelligence project?

Typically, on a BI project, if you ask a business user, ‘what do you want’ you will get one of 2 responses.

- I don’t know.

- I want everything.

Which are, effectively, the same thing…

As much as it pains IT people to hear these responses, if you think about it, they are actually the right answer. From day to day, a business user, especially any analyst in a business, doesn’t know how they will want to view and analyse their data. They are responding to changing business environment on a day to day basis. The BI team’s job is to deliver the capability to analyse data in a variety of ways to these business users.

Gathering requirements for a Data Warehouse project is different to Operational systems. In Operational systems, you can start with a blank sheet of paper, and build exactly what the user wants. On a Data Warehouse project, you are highly constrained by what data your source systems produce. Therefore letting an end user go wild with all kinds of esoteric requirements can lead to horrible disappointment.

There is no silver bullet. Like on most projects, you have to work with what’s in front of you. Organisations undertaking BI projects all start with very different levels of vision, understanding and expertise. Each situation calls for a unique approach. However, one thing we find is true is that the requirements gathering process is as much an education process as it is a requirements gathering exercise. Most business users are unaware of the possibilities of BI, and will often describe their requirements in terms of what they have had before (e.g. A list all sales for June that I can import into Excel…)

Building a Data Warehouse is mostly about building capability, rather than delivering specific report outcomes. It’s a mistake to take a ‘Business Intelligence’ requirement (i.e. this Report, that report… etc) and build a data Warehouse just to satisfy the reporting requirement. Tomorrow, a new requirement might arise, which would fundamentally change the Data Warehouse (Usually the detail level, known as the grain, of a Fact table).

In the next sections, we outline 3 different approaches to gathering business requirements for a data warehouse.

Ontology

The mantra for Data Warehouse design is “Model Reality”. In previous lessons, we have discussed how Fact represent Business Events/Processes and Dimensions represent business entities.

The natural way to understand the requirements of the Data Warehouse is to simply describe the Business Entities and Processes.

To do this we recommend writing an Ontology. An Ontology is a big word for a simple concept:

- Ontology: “a set of concepts and categories in a subject area that shows their properties and the relations between them.”

Keeping things simple, we recommend creating a document with the following format:

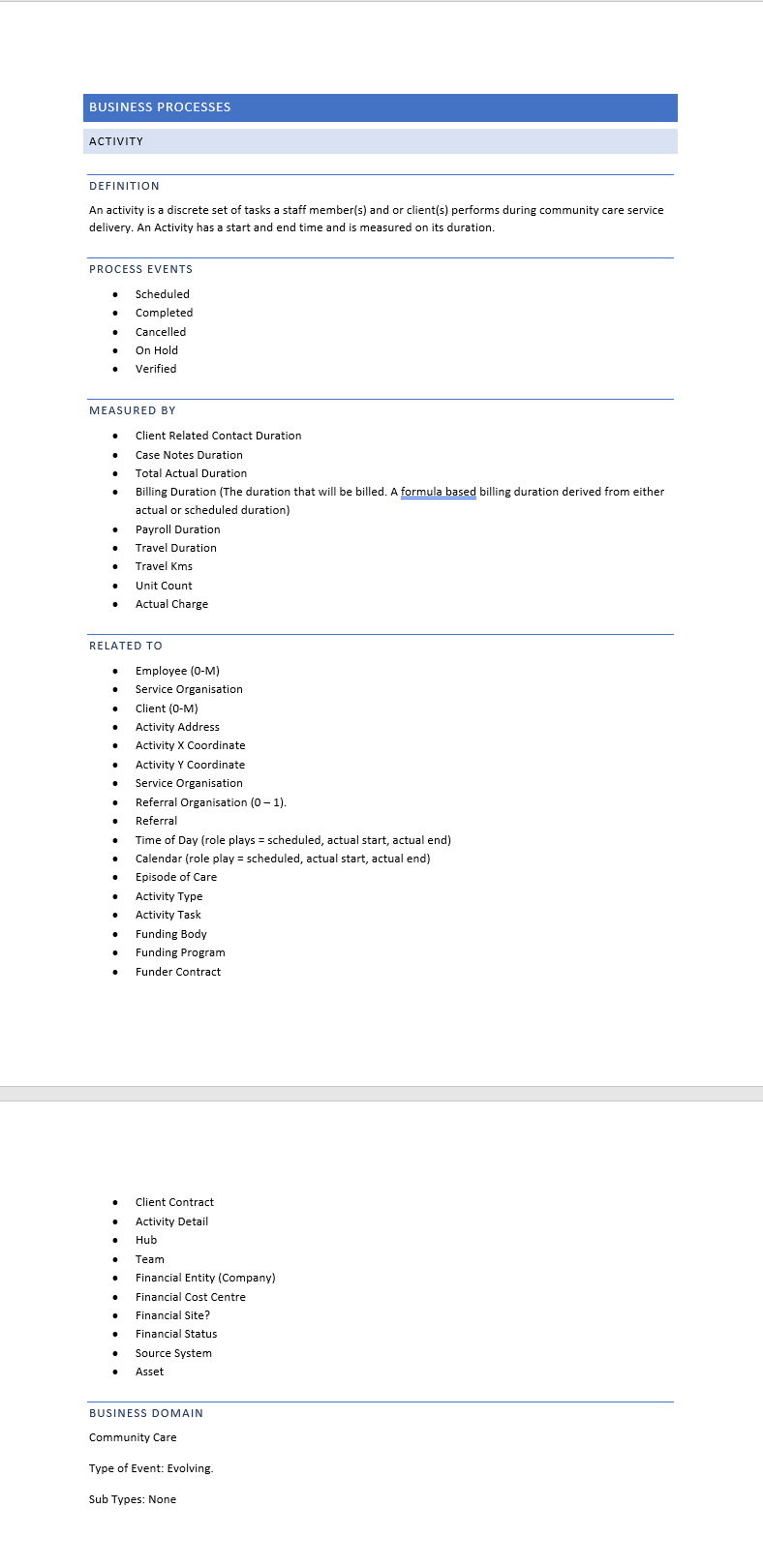

Business Process

Below is an example of a Business Process description in an Ontology document:

- Definition: Provide a business definition of the business process.

- Business Events. If the business process is evolving, provide a list of business events.

- Measured By. List how the process is measured. Obviously, these become the measures in your Fact.

- Related To. List the business entities that represent the who, what, where, when and why of the business process. Give a cardinality where it makes sense.

- Business Domain: The business domain this entity belongs too. It’s an indication of what data mart the entity will belong too. If you want to keep conformed dimensions in a Master Data Mart, then, set this value to master.

- Type of: If this entity is a subtype of another entity, name the other entity here.

- Subtypes: The list of subtypes of this entity.

A business process will become a Fact in the Data Warehouse.

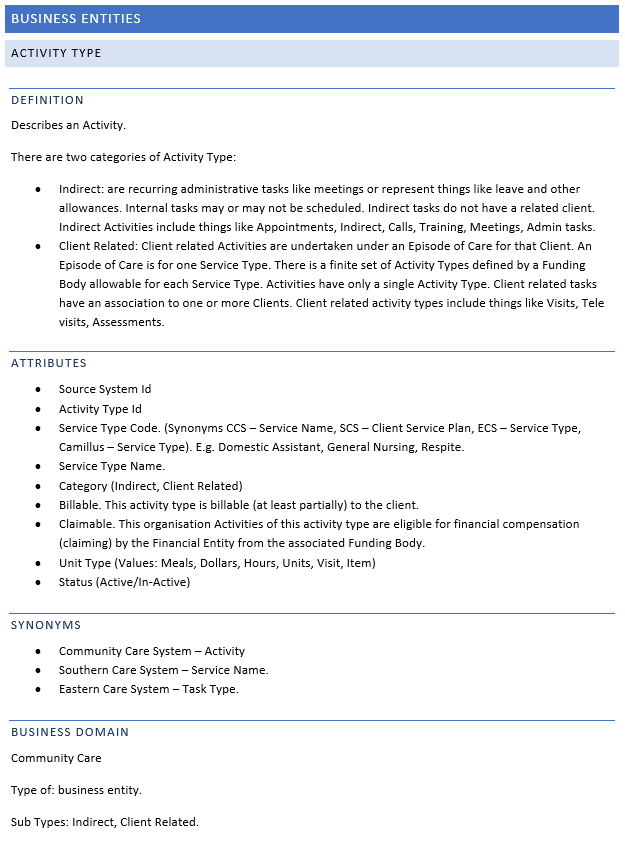

Business Entities

Below is an example of a Business Entity description in an Ontology document:

- Definition: Provide a business definition of the entity. Keep this definition relatively concise. Be careful not to wander into describing other entities and processes in the business.

- Attributes: Include a list of attributes of the entity. Provide example values where possible.

- Synonyms: Where it’s possible to identify the same entity in a source system, name the same entity as a synonym. This can help understanding, especially if you have multiple systems providing the same role.

- Business Domain: The business domain this entity belongs too. It’s an indication of what data mart the entity will belong too. If you want to keep conformed dimensions in a Master Data Mart, then, set this value to master.

- Type of: If this entity is a subtype of another entity, name the other entity here.

- Subtypes: The list of subtypes of this entity.

A business entity will become a Dimension in the Data Warehouse.

Model Storming

A very similar approach, with much more detail about how you arrive at these definitions is described by Lawrence Corr in his book Agile Data Warehouse Design: Collaborative Dimensional Modeling, from Whiteboard to Star Schema. It is a step-by-step guide for capturing data warehousing/business intelligence (DW/BI) requirements and turning them into high-performance dimensional models in the most direct way: by model-storming (data modelling + brainstorming) with BI stakeholders. It describes BEAM✲, an agile approach to dimensional modelling, for improving communication between data warehouse designers, BI stakeholders and the whole DW/BI development team.

Data Driven Design

Bottom Up “Data Driven Design” is also an exercise in “Modelling Reality”.

Data Driven Design refers to a design technique that focuses on examining the data and database schema of Source Systems to understand the Business Processes and Entities of the Business. The fact is that the Business process and entities exist in the source systems, in detail.

A forensic examination of the source systems can reveal the business processes and entities.

Data Warehouse projects have certain characteristics that make them suitable for Data Driven Design. The key characteristic is that Data Warehouse projects are highly constrained. They are constrained by the data contained in the source systems of the Data Warehouse, and, from a requirements perspective, a Data Warehouse is constrained to modelling existing business processes (other than perhaps reporting and management processes).

Unlike an operational system project where development is about new or changing business processes, it is not realistic to get requirements about, for instance, what attributes of a Customer that need to be captured, and then model into a Data Warehouse Data Model. First… the underlying Source System may or may not support the attributes and secondly it is easier to go directly to the existing Source System and discover them.

Data Driven Design will shortcut the requirements process. Clearly existing Business Process will be manifest in one or more Source Systems, and can be ‘discovered’. Thus a Data Driven Design approach can be taken, using existing data to derive a design for the Data Warehouse. Data Driven Design doesn’t mean ignoring business requirements all together. To the contrary the business needs to be brought along on the journey, but only limited and specific input and prioritization is required from the business in the early iterations. In later iterations the business will be better equipped to properly articulate their requirement.

Overview of Data-Driven Design for Data Warehousing

Below is an outline of Data-Driven Design and Agile Methodology applied to Data Warehousing.

First Iteration

The first iteration is a little different. It is as much a business education process, as it is a development exercise. The four steps of the process are described below. They will occur in roughly this order, but it’s likely that some steps will occur simultaneously and repetitively as required.

- Discover the Business Processes that are included in the project scope. Data Driven Design doesn’t mean ignoring business requirements altogether. To the contrary, the business needs to be consulted constantly and brought along on the journey. A detailed description of the Business Process is not required. Just a simple accurate story card for each Business Process and Business Event, identifying the key entities involved (The Who, What, Where, When and Why) and how the Business Events are measured. For example… an Ontology! The identified Business Processes need to be prioritized in a business value order. This order needs to be weighed against the technical complexity of each Business Process to decide which Business Processes will be implemented in the first iteration of the project. Try to choose a relatively high value and simple Business Processes for the first iteration.

- Discover the Source Systems for the selected iteration 1 Business Processes and get access to their database/files etc. Having real-life data is important, and can sometimes be a challenge.

- Discover within the Source System database(s) the manifestation of each of the Business Process. This will require communication back and forth with the business representatives. Think of yourself as a data detective. A forensic data detective, CSI style! Quite often Source Database tables and columns are well named and easy to understand. In many cases, the Database schema is undecipherable. Many packaged ERP suffer from this. Every Holmes needs a Watson. Collaboration with a data-focused Subject Matter Expert (SME) or experienced Source System programmer is essential. A data focused SME will have experience with the underlying data, perhaps learned from extracting data from the Source System Database for reports. This step will identify the tables, columns, data and grain of data involved in each Business Process. It should also discover various cases and examples of the Business Process within the data. Save these cases if possible, they become useful for testing later. Physically, this step will involve a lot of data profiling and querying of the Database. Other resources you will find useful are Source System user manuals, technical manuals, a data dictionary or existing reports that extract data.

- Develop a first cut Data Warehouse Data Model and ETL based on what has been discovered. The Data Model will contain only those tables required for the first iteration but must conform to good Data Warehouse design principles, so that the model can be easily expanded in the future. It is absolutely essential that a next generation Data Warehousing tool like Dimodelo Data Warehouse Studio is used to develop the Data Warehouse and ETL. The Data Warehouse and ETL is going to go through many iterations, much change, plenty of regression testing and several releases. Short iterations are just not feasible without a tool like Dimodelo Data Warehouse Studio.

The idea of the first iteration, and to a lesser extent, other early iterations, is to give the business a first look at the Data Warehouse. This helps to get them thinking dimensionally and improve their ability to articulate requirements. Gathering requirements is traditionally a major issue in Data Warehouse projects. The first iteration is an education process, helping the business understand the capabilities of BI. We recommend you demonstrate standard reports, dashboards, scorecards and ad-hoc analytics. Only deploy the first iteration to a sandpit environment. Somewhere the users can ‘play’. Keep expectations of data accuracy low, it’s unlikely you will nail the data 100%. It’s possible you may even need to start from scratch again, but that’s not an issue with Dimodelo Data Warehouse Studio or other similar tools.

Subsequent Iterations

Rinse and Repeat. Subsequent iterations follow the same process as the first iteration but the focus of subsequent iterations is to improve and refine the existing solution based on feedback from previous iterations. Only introduce new functionality for new Business Processes when resources allow.

Subsequent iterations introduce additional Agile disciplines, like Test Driven Development, Continuous Integration, Source Control and Release Management, each of which could fill several blog posts on their own. It does suffice to say, automation is the key.

It’s likely that subsequent iterations become release candidates for release into production. Again discipline is required. Releasing a Data Warehouse into production is not an easy task, especially if there is an existing version already in production. At this point, it is also important to focus on non-functional requirements like security, disaster recovery etc. Usually, the planning for non-functional requirements would have already occurred, and there is nothing wrong with that. However, where there is a fairly standard architectural scenario, there is an argument that it may not be wise to invest time and money into the architectural tasks until a viable Data Warehouse and BI solution that meets the business needs has been proved.

.. Now… if only we could get the PMO, the Op Ex and Cap Ex budgets, and the business to align with the uncertainty of an Agile project… A whole other blog post again.

The Criticism of Data Driven Design

In the past Data Driven design has been used on Data Warehouse projects to the exclusion of all other techniques, and without using an Agile project methodology or Agile Data Warehouse development tools like Dimodelo Data Warehouse Studio. User involvement was avoided or minimized (which suited IT). It was mistakenly thought that Data Warehouse development meant simply re-modelling multiple source systems into a single Data Warehouse model. Only after the system was built, using inefficient, error-prone, time consuming and inflexible manual coding practices were the users engaged properly. Unfortunately, more often than not, the results miss the mark and failed to answer the business questions. With an inflexible ETL framework that takes months to make any significant change, the project team found themselves in trouble, and potentially out of budget.

The real problem was the time the development team took to develop the solution and the inflexible nature of the resulting system. Shorten the development cycles, use a Data Warehouse Automation tool, call the result a prototype, and a paradigm shift occurs. Iterate multiple times to improve and refine the application to the business requirements.

Why use the Agile Methodology for Data Warehousing Projects?

Many IT projects fail. Depending on who you believe, its anywhere between 50% and 80% (See references below). Shocking! I believe that you need to take these figures with a grain of salt. It depends on your definition of failure (See discussion on failure below).

It has also been shown that small projects are more successful than large projects and those projects using the Agile methodology are also more successful (See references). Given that small agile projects are the most successful and that Gartner states that over 70% of BI projects are not successful, it follows that applying an Agile project methodology to a Data Warehouse project is a sensible strategy. Data Driven Design is a perfect companion for Agile.

Failure Rate References:

Small projects are more successful than large projects references:

- Gartner Survey Shows Why Projects Fail

- Failure of large projects – why it is better to be small particularly in IT

Agile project methodology is more successful references: - Ambysoft Project Success Rate Survey

- Agile Business Intelligence Would Be A Good Idea

- Agile succeeds three times more often than Waterfall

Definition of Project Failure

The definition of project failure is usually a project that has a combination of the following issues: significantly over budget, significantly over time, doesn’t deliver full functionality, or is cancelled outright. However, I think it’s more subjective than that, and depends on project context. If a Home building project went over budget 20% or time by 20%, but at the end of the project, the house was everything the client wished for… is this a failure? The answer – depends… For a more in-depth understanding of the subjective contextual nature of project failure, you can read The rise and fall of Standish Chaos Report figures.

Testing your Design

This is an essential step that will improve your design.

How do you test your design? No first cut design stands up to the appearance of a report! Designing a data warehouse to cater only for existing reports, spreadsheets and analysis is a mistake. However, you should test your design against those existing reports etc.

Can you produce those reports from your data warehouse? Inevitably the answer will be no. You will discover new calculated measures, hierarchies and different aggregations needed to respond to these requirements. You may discover you need new periodic snapshot tables or entirely different facts from different domains.

Conclusion

In my experience, the Ontology method is the most successful way of defining Data Warehouse requirements.

If you want to take this exercise even further I would recommend the Model-Storming approach. Indeed, I would highly recommend at least reading the Laurence Corr’s book. It will improve how you write an Ontology.

The Ontology exercise will most likely be informed by a Data Driven Design approach. Because your source systems are the manifestation of your business process and entities, it is likely you will discover the processes and entities you need to document through Data-Driven analysis.

Once the ontology is complete you then use it to define your Data Warehouse Matrix. This then becomes your high-level design document, project scope and key project planning and communication tool.

I highly recommend you undertake this style of requirements gathering before starting the build of your data warehouse. I see many implementations dive straight into the design of facts and dimensions. The requirements gathering exercise is the missing link between the business and the design.

I’ve seen many experienced data warehouse developers encounter an ontology document for the first time and thank God for its existence! Literally.

It need not be a long exercise. A 3-day workshop with experienced data analysts, business analysts and/or SMEs is all you need to gather the information for a reasonably complex domain, plus perhaps 2-3 days to document. This document will keep delivering value, especially as the makeup of the team changes. It may be the only definitive definition of the business processes in existence.